You Tuned It Once. The Domain Changed a Hundred Times

Retrieval system behavior is determined long before anyone tunes a ranker or debates top-k. It is determined by the boundaries established at the start: segmentation rules, signal weights, and coverage assumptions. Those boundaries encode an internal model of the domain—its structure, its hierarchy, and its expected patterns of meaning.

When the domain evolves and the boundaries remain static, the system maintains fidelity to an obsolete structure. This is not instability; it is consistency applied to a world that has already shifted. The system continues operating within the constraints you gave it, even after those constraints stop matching how the domain expresses meaning.

Boundaries act as the system's implicit worldview. When the worldview falls behind the domain, drift begins—not as an error, but as a steady misalignment between what the system preserves and what the domain now requires.

What Boundary Drift Actually Is

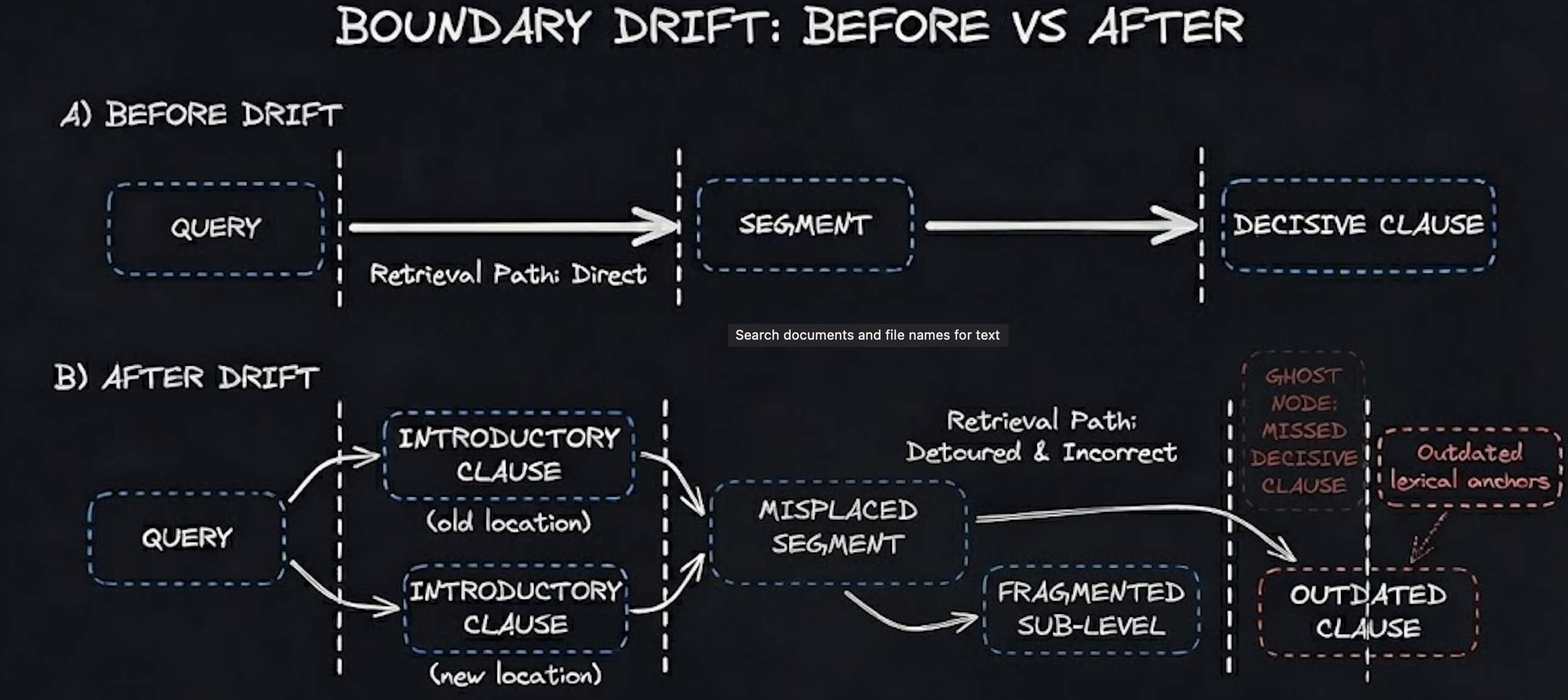

Boundary drift is the structural mismatch that forms when the domain's hierarchy, phrasing patterns, or conceptual topology shifts and the retrieval layer continues executing its original assumptions. Chunking rules calibrated to a previous hierarchy begin segmenting through decisive clauses after heading depths change. Ranking logic tuned to older phrasing elevates passages that survive statistically but no longer represent governing meaning. Hybrid retrieval surfaces boilerplate because embeddings overweight persistent lexical patterns that have lost semantic relevance.

Two early examples illustrate this mechanism. A three-clause regulation that once occupied a single segment is now split across four nested headings; the original chunking logic slices the governing clause away from its qualifying exception, so retrieval surfaces one without the other. A cross-reference chain that previously resolved cleanly now fragments because updated documents introduce new anchors that the existing scorer does not weight correctly, so the chain is broken into semantically weak fragments across the ranked list.

Externally, top-k results look plausible. Score distributions sit within normal variance. Coverage metrics appear unchanged. Internally, the segmentation and scoring regime is no longer aligned with the structure that actually governs meaning. What presents as relevance is often an artifact of an older structure encoded in the boundaries themselves.

To separate meaningful drift from normal variance, boundaries must be monitored numerically rather than intuitively. Corpus-level structural change beyond roughly five percent—new templates, heading depths, or clause layouts—consistently predicts that segmentation no longer preserves decisive text. Anchor-query overlap shifts of thirty percent or more in top-5 results reliably correlate with downstream divergence in system outputs even when end-to-end accuracy appears stable. Score-distribution anomalies—variance collapse or disproportionate elevation of boilerplate in the top-10—indicate that the ranking layer is still honoring an older linguistic distribution. These thresholds expose drift before it becomes operationally expensive.
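As a minimal sketch, the three thresholds can be written as an explicit check rather than left as rules of thumb. The class and function names are hypothetical, and the variance cutoff (current variance below half of baseline) is an assumed value chosen for illustration; the text above gives figures only for structural change and top-5 overlap.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DriftThresholds:
    structural_change: float = 0.05   # share of corpus with new structure (from the text)
    top5_overlap_shift: float = 0.30  # share of baseline top-5 displaced (from the text)
    variance_ratio: float = 0.5       # ASSUMPTION: current / baseline score variance


def drift_flags(structural_change, top5_overlap_shift, variance_ratio,
                thresholds=DriftThresholds()):
    """Return the names of the drift signals that crossed their threshold."""
    flags = []
    if structural_change > thresholds.structural_change:
        flags.append("structural")
    if top5_overlap_shift >= thresholds.top5_overlap_shift:
        flags.append("anchor_overlap")
    if variance_ratio < thresholds.variance_ratio:
        flags.append("variance_collapse")
    return flags
```

Keeping the thresholds in one frozen object makes them reviewable in the same way as any other boundary setting.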

Metrics for Unstructured and Semi-Structured Domains

Structured regulatory corpora expose drift cleanly because clause boundaries and hierarchies are explicit, but most domains are messier. Product documentation, help centers, research repositories, and support transcripts mix narrative text, tables, screenshots, and ad-hoc headings; drift appears first in how concepts co-locate rather than in whether a specific clause moved one section deeper. Measuring drift in these environments requires metrics that operate on behavior and topology rather than on explicit article–section layouts.

One class of metrics is continuity-based. Query–map continuity measures how stable the path of a query through the corpus remains over time: for a fixed anchor set, track top-k overlap, average rank shift of decisive spans, and depth inflation as new content arrives. A continuity score dropping from 0.9 to 0.7, or a median decisive-span rank moving from 3 to 11, is evidence that boundaries are no longer aligned even if precision@k has not yet fallen.

Another class is coverage-based: build topic or cluster models over the corpus and measure what fraction of each cluster's decisive spans are reachable within depth k. When coverage for a cluster falls below a threshold (often around 0.7) or coverage variance across clusters doubles, the system is losing contact with parts of the domain even if no single document has an obvious structural error.
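The rank and coverage measurements described above can be sketched in a few lines. This is an illustration under stated assumptions, not a reference implementation: spans are identified by string ids, "reachable" means appearing in the top k of at least one anchor query, and both function names are hypothetical.

```python
from statistics import median


def median_decisive_rank(ranked_ids, decisive_ids, miss_rank):
    """Median 1-based rank of the decisive spans for one query.

    Spans missing from the ranked list are counted at miss_rank,
    an assumed penalty rank supplied by the caller.
    """
    position = {}
    for rank, span_id in enumerate(ranked_ids, start=1):
        position.setdefault(span_id, rank)  # keep the best rank on duplicates
    return median(position.get(span_id, miss_rank) for span_id in decisive_ids)


def cluster_coverage(decisive_by_cluster, ranked_lists, k):
    """Fraction of each cluster's decisive spans found within depth k."""
    reachable = set()
    for ranked in ranked_lists:
        reachable.update(ranked[:k])
    return {
        cluster: len(spans & reachable) / len(spans)
        for cluster, spans in decisive_by_cluster.items()
        if spans
    }
```

Tracking these two numbers per snapshot gives the trend lines the paragraph describes: a rising median rank and a falling per-cluster coverage.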

For highly unstructured inputs such as call transcripts or free-form notes, drift appears as semantic dilution. Here, metrics such as semantic compression ratio (how much decisive content per retrieved token), answer–context faithfulness, and prompt-driven test suites that re-probe the same latent decision points become critical. The target is the same: make the system's internal forgetting visible as numbers before users experience it as incoherence.
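One rough way to put a number on semantic compression ratio is to divide decisive tokens by retrieved tokens. The sketch below assumes whitespace tokenization, verbatim substring matching, and non-overlapping decisive spans; all three are simplifications introduced here, and the function name is hypothetical.

```python
def semantic_compression_ratio(chunks, decisive_spans):
    """Share of retrieved tokens that belong to a decisive span.

    ASSUMPTIONS: tokens are whitespace-separated, a span counts only when
    it occurs verbatim in a chunk, and decisive spans do not overlap.
    """
    total_tokens = sum(len(chunk.split()) for chunk in chunks)
    if total_tokens == 0:
        return 0.0
    decisive_tokens = 0
    for chunk in chunks:
        for span in decisive_spans:
            if span in chunk:  # substring match, so partial words can match
                decisive_tokens += len(span.split())
    return decisive_tokens / total_tokens
```

A falling ratio for the same anchor questions means more retrieved text is needed to carry the same decisive content, which is the dilution described above.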

How Drift Shows Up Before You Can Measure It

Domain experts perceive drift before telemetry does. Reports that answers feel outdated, overly cautious, or generic are early indicators that retrieval is anchored to a superseded conceptual structure. These are not merely subjective complaints; they are observations of structural misalignment. Any correction to boundaries must be explicit and reviewable; without oversight, a system can adopt new structures that do not reflect the domain's intent.

The initial displacement is subtle. Queries that once surfaced decisive clauses return fragments with weakened context. Commentary that previously aligned tightly with its governing regulation begins drifting when structural changes move the anchor points. Exceptions reappear because the boundaries that isolated them no longer map to the new document layout. A second behavioral example: internal decision matrices lose continuity. Clauses that should appear sequentially begin surfacing as isolated fragments in top-k because their structural proximity changed while chunking rules remained constant.

Engineering responses often target the symptom rather than the cause. Teams tighten prompts, add filters, or constrain sampling parameters. These interventions suppress downstream noise while leaving upstream misalignment intact. Drift accumulates because the system continues honoring the boundaries it was given. Obedience becomes the failure mode.

The Project That Made Drift Impossible to Ignore

A compliance engine operating on roughly 28,000 documents made the pattern explicit. The corpus consisted of statutes, guidance notes, exception clauses, and quarterly updates. Structural change occurred predictably every three months.

At launch, a suite of 312 anchor queries achieved top-3 clause alignment near 0.92. Latency distributions were tight. Analysts relied on the system as their primary interface to the regulatory corpus. Three months later, analysts described the responses as heavier and more cautious. No telemetry supported the claim. Accuracy remained stable. Latency curves were unchanged. Ranking variance remained within normal bounds.

The fracture lived in the structure. Seventeen percent of the corpus adopted new heading depths and altered syntactic patterns. Chunking rules tuned to the earlier hierarchy bisected clauses carrying regulatory force. Ranking logic trained on the previous linguistic distribution elevated safe but outdated passages. Decisive text drifted deeper, not because it lacked relevance but because its structural cues no longer aligned with the system's frozen assumptions.

The system had not degraded. It was faithfully modeling a regulatory world the domain had already left behind. At this scale, drift stops being a cleanup task and becomes a continuous pressure exerted by a domain moving faster than the boundaries intended to represent it.

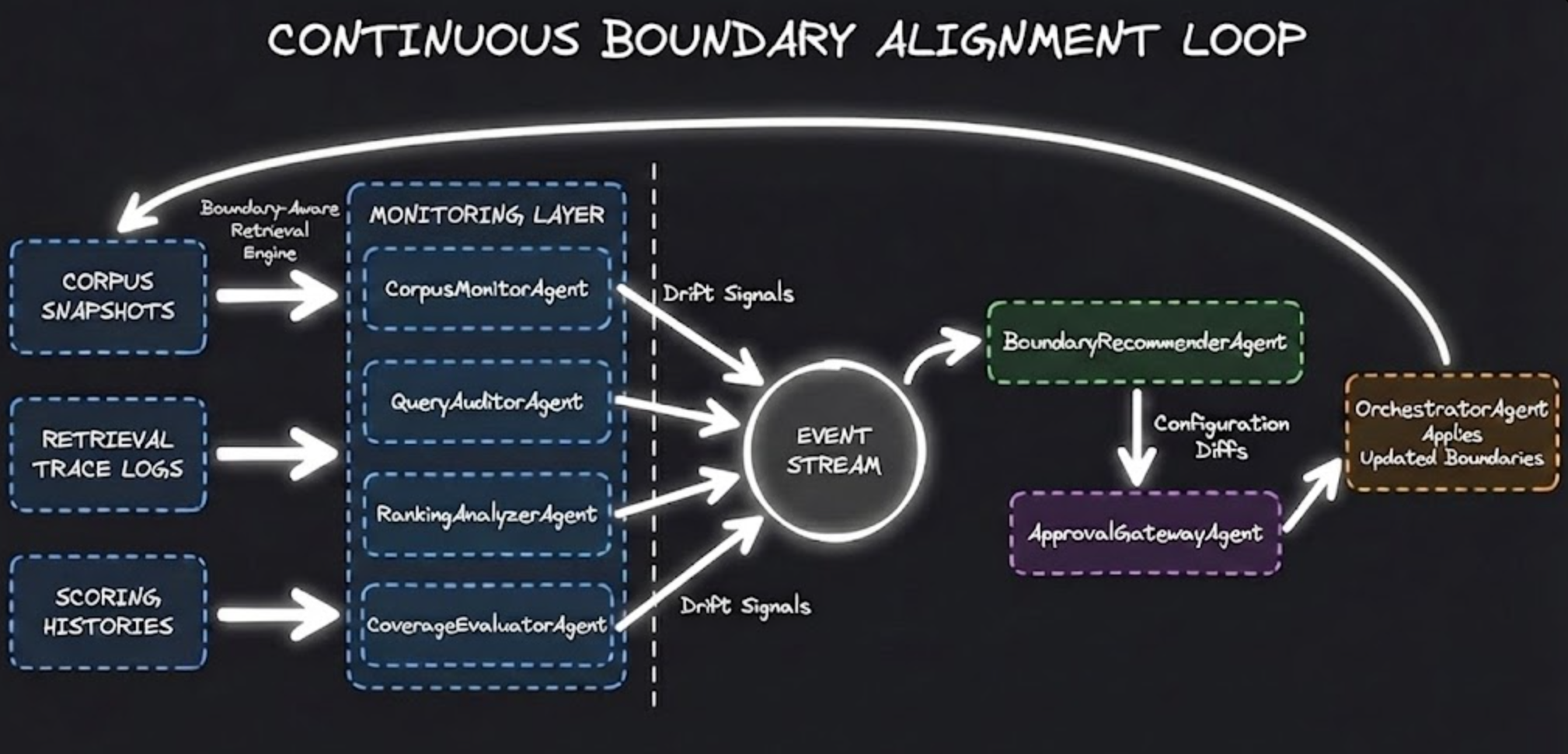

The System Built to Stay Aligned

The solution was to build an architecture that treats drift as a primary signal rather than an afterthought. Each component behaves as an observer responsible for detecting how the domain is changing and ensuring boundaries update before misalignment becomes visible.

CorpusMonitorAgent

CorpusMonitorAgent fingerprinted documents using MinHash and SimHash, tracking heading depth, clause sequencing, and template shifts. Operating over consistent corpus snapshots, it detected structural changes beyond normal variance. When more than five percent of documents adopted new patterns, it signaled that segmentation and indexing assumptions likely no longer preserved decisive text. In this corpus, structural drift consistently preceded semantic drift.
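The snapshot comparison can be illustrated with a simplified stand-in. The sketch below does not use MinHash or SimHash; it computes exact Jaccard similarity over heading-depth shingles, which is the quantity MinHash approximates at scale. It assumes Markdown-style `#` headings, and the 0.8 similarity cutoff and all function names are hypothetical.

```python
import re

# ASSUMPTION: documents mark headings Markdown-style ("#", "##", ...).
HEADING = re.compile(r"^(#{1,6})\s", re.MULTILINE)


def heading_depths(text):
    """Heading depths in document order, e.g. [1, 2, 2, 3]."""
    return [len(match.group(1)) for match in HEADING.finditer(text)]


def shingles(depths, n=2):
    """Set of consecutive depth n-grams describing the outline shape."""
    if len(depths) < n:
        return {tuple(depths)}
    return {tuple(depths[i:i + n]) for i in range(len(depths) - n + 1)}


def jaccard(a, b):
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def changed_fraction(old_docs, new_docs, min_similarity=0.8):
    """Share of documents present in both snapshots whose outline changed."""
    shared = old_docs.keys() & new_docs.keys()
    if not shared:
        return 0.0
    changed = 0
    for doc_id in shared:
        old_shape = shingles(heading_depths(old_docs[doc_id]))
        new_shape = shingles(heading_depths(new_docs[doc_id]))
        if jaccard(old_shape, new_shape) < min_similarity:
            changed += 1
    return changed / len(shared)
```

A result above 0.05 corresponds to the five percent signal described above.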

QueryAuditorAgent

QueryAuditorAgent maintained historical retrieval maps for anchor queries. It replayed these queries on a fixed cadence and measured top-k overlap against baseline paths. A thirty-percent shift in top-5 results reliably indicated that the domain had moved beneath the system. Stable queries drifting into unfamiliar hierarchy regions are among the clearest indicators that boundaries have fallen behind.
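The replay step reduces to a set comparison per anchor query. In this sketch, `retrieve` is a hypothetical callable standing in for whatever the live retrieval stack exposes, and baselines are stored as ordered lists of result ids.

```python
def top_k_shift(baseline, current, k=5):
    """Fraction of the baseline top-k that no longer appears in the current top-k."""
    base = set(baseline[:k])
    if not base:
        return 0.0
    return 1.0 - len(base & set(current[:k])) / len(base)


def audit_anchor_queries(baselines, retrieve, k=5, threshold=0.30):
    """Replay each anchor query and return those whose shift meets the threshold.

    baselines: dict mapping query text to its baseline ranked ids.
    retrieve:  hypothetical callable, query -> current ranked ids.
    """
    flagged = {}
    for query, baseline in baselines.items():
        shift = top_k_shift(baseline, retrieve(query), k)
        if shift >= threshold:
            flagged[query] = shift
    return flagged
```

Because the comparison ignores order within the top k, it captures displacement out of the result set rather than reshuffling inside it; rank-sensitive measures would need a separate metric.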

RankingAnalyzerAgent

RankingAnalyzerAgent monitored score distributions across lexical and dense similarity channels. Rising boilerplate frequency, reappearance of deprecated phrasing, or compression of score variance signaled that the ranking logic still reflected an older linguistic regime. Ranking anomalies appear earlier than accuracy drift because they expose how the system interprets signal.
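Two of these signals are easy to compute from stored scoring histories. The sketch below assumes scores for one channel are available as plain lists and that boilerplate passages have already been labeled by id; how that labeling is done is outside the sketch, and the function names are hypothetical.

```python
from statistics import pvariance


def variance_ratio(baseline_scores, current_scores):
    """Current score variance relative to baseline; values well below 1 suggest collapse."""
    base = pvariance(baseline_scores)
    if base == 0:
        return 1.0
    return pvariance(current_scores) / base


def boilerplate_share(top_ids, boilerplate_ids):
    """Fraction of a ranked list made up of passages labeled as boilerplate."""
    if not top_ids:
        return 0.0
    hits = sum(1 for passage_id in top_ids if passage_id in boilerplate_ids)
    return hits / len(top_ids)
```

Running both per channel (lexical and dense) keeps a collapse in one channel from being masked by the other.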

CoverageEvaluatorAgent

CoverageEvaluatorAgent assessed conceptual reach. It measured retrieval depth for decisive clauses, evaluated continuity across regulatory themes, and used topic-level embeddings to ensure top-k results still represented the domain's conceptual topology. When continuity collapsed or decisive clauses moved deeper, boundaries were no longer aligned with domain structure.

BoundaryRecommenderAgent

BoundaryRecommenderAgent transformed drift signals into auditable proposals. It generated updated chunking heuristics, recalibrated ranking weights to match the new linguistic distribution, scheduled embedding refresh cycles when conceptual clusters shifted, and recommended depth adjustments based on latency budgets. Each proposal included projected precision, coverage, and cost impacts. Drift becomes actionable only when represented as concrete boundary updates.

ApprovalGatewayAgent

ApprovalGatewayAgent enforced structured oversight. It routed proposals for review, recorded rationales, and maintained a ledger of accepted and rejected adjustments. High-stakes domains require accountability. Silent automation is not alignment.
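A ledger of this kind can be small. The following is a sketch under assumptions: the field names, the in-memory list, and the rule that an empty rationale is rejected are all illustrative choices, not details taken from the project.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class BoundaryProposal:
    proposal_id: str
    change: str                  # human-readable description of the boundary update
    projected_precision: float
    projected_coverage: float
    projected_cost_delta: float  # ASSUMPTION: relative cost change, e.g. 0.10 = +10%


@dataclass(frozen=True)
class Decision:
    proposal: BoundaryProposal
    accepted: bool
    reviewer: str
    rationale: str
    decided_at: datetime


class ApprovalLedger:
    """Append-only record of accepted and rejected boundary proposals."""

    def __init__(self):
        self._decisions = []

    def record(self, proposal, accepted, reviewer, rationale):
        if not rationale.strip():
            raise ValueError("a rationale is required for every decision")
        decision = Decision(proposal, accepted, reviewer, rationale,
                            datetime.now(timezone.utc))
        self._decisions.append(decision)
        return decision

    def approved(self):
        """Proposals that may be applied, in decision order."""
        return [d.proposal for d in self._decisions if d.accepted]

    def history(self):
        """Immutable view of every decision, including rejections."""
        return tuple(self._decisions)
```

Recording rejections alongside approvals matters: a rejected proposal that keeps reappearing is itself a drift signal worth reviewing.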

OrchestratorAgent

OrchestratorAgent scheduled monitoring cycles, routed events, ensured consistent snapshot usage, and applied approved updates. Without orchestration, the components behave like isolated detectors. With orchestration, they form a continuous alignment loop.

Integration required isolating alignment logic from the request path: agents operated over snapshots, retrieval traces, and scoring histories rather than interfering with live traffic. Engineering challenges included ensuring snapshot consistency, preventing deadlocks during re-indexing, avoiding GPU bottlenecks during embedding refresh cycles, and establishing deterministic drift-window timing so detection did not lag behind corpus changes.
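The control flow of one monitoring cycle can be sketched as a single function. Every name here is hypothetical: detectors, the recommender, the reviewer, and the update step are passed in as plain callables so the loop stays independent of any particular retrieval stack.

```python
def run_cycle(snapshot, detectors, recommend, review, apply_update):
    """Run one alignment cycle against a single corpus snapshot.

    detectors:    dict of name -> callable(snapshot) returning True when drift is detected.
    recommend:    callable(triggered_names) returning a list of proposals.
    review:       callable(proposal) returning True only if a reviewer approved it.
    apply_update: callable(proposal) that applies an approved boundary update.
    """
    # Every detector sees the same snapshot, so their signals are comparable.
    triggered = sorted(name for name, detect in detectors.items() if detect(snapshot))
    if not triggered:
        return []
    applied = []
    for proposal in recommend(triggered):
        if review(proposal):  # nothing is applied without an explicit approval
            apply_update(proposal)
            applied.append(proposal)
    return applied
```

Because the function touches only the snapshot and the callables it is given, it can run on a schedule outside the request path.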

How This Generalizes Beyond Regulatory Domains

Although this architecture originated in a structured compliance corpus, the principles extend to less structured and more rapidly evolving environments. In product documentation, drift manifests when conceptual clusters shift faster than embedding refresh cycles. In scientific literature, drift appears when emerging terminology disrupts established co-occurrence patterns. The same loop applies: detect structural or distributional shifts, compare against baselines, propose boundary updates, and apply only after review.

In customer support logs and call transcripts, drift shows up as new failure modes, policies, and edge-case resolutions that never existed in the original data; retrieval continues privileging the historical failure landscape. Here, the architecture focuses less on explicit headings and more on conversational templates, outcome labels, and sentiment or risk markers, but the underlying mechanism is unchanged. The system remembers old boundaries until it is explicitly instructed to let them go.

Why This Level of Automation Matters

Retrieval systems rarely fail abruptly. They fail by continuing to operate correctly against an obsolete structure. In domains with tens of thousands of evolving documents—or any environment defined by rapid iteration—boundaries lose relevance faster than intuition predicts. By the time drift appears in metrics, the misalignment has already reshaped system behavior.

Automation does not replace judgment. It preserves it. It converts structural change into telemetry, domain movement into explicit signals, and early discomfort into actionable alignment decisions. In a moving domain, the responsibility is not to tune a system once, but to keep its boundaries aligned as the world continues to shift.